After two years of careful analysis, the World Health Organization (WHO) has weighed in on the ethics and governance of artificial intelligence in health care—and it’s not a moment too soon.

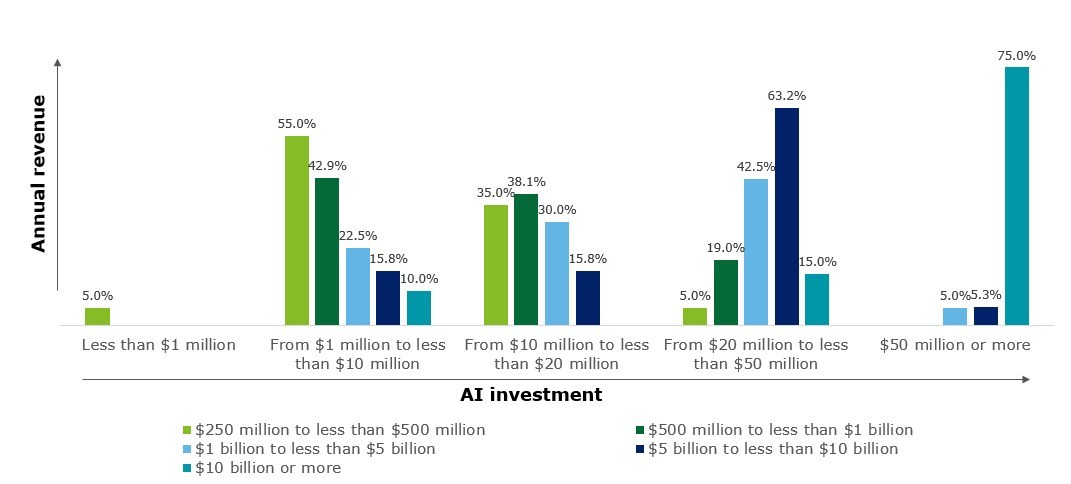

Adoption of AI systems in health care has risen significantly in the last few years. According to a Deloitte survey, 73 percent of the health care organizations surveyed increased their AI funding in 2020. Moreover, 75 percent of large organizations (annual revenue of more than $10 billion) invested more than $50 million in AI projects or technologies.

Figure: Organizations with higher annual revenue invest more heavily in AI. Note: N=120 respondents (US=87, other global regions=33). Source: Deloitte, State of AI in the Enterprise, 3rd Edition survey.

Another report, from KPMG, found that AI adoption accelerated during the pandemic across all sectors, particularly in health care. Health care and life sciences leaders alike have bought into AI's ability to monitor the spread of COVID-19 cases (94 percent and 91 percent of respondents, respectively), help with vaccine development (90 percent and 94 percent) and support vaccine distribution (90 percent and 88 percent).

“Artificial intelligence holds enormous potential for improving the health of millions of people around the world, but like all technology it can also be misused and cause harm,” said WHO Director-General Tedros Adhanom Ghebreyesus in a statement.

WHO’s report is bullish on AI, crediting the technology with the ability to improve the speed and accuracy of diagnosis and screening for diseases, assist with clinical care, strengthen health research and drug development, and support diverse public health interventions. However, WHO also cautions against relying too much on the technology and advises health care organizations to be wary of unethical collection and use of health data and biases encoded in the algorithms.

WHO shared six principles to guide AI adoption in health care:

- Protecting human autonomy: Ensuring that humans remain in control of health care systems and medical decisions, and protecting privacy and confidentiality.

- Promoting human wellbeing and safety and the public interest: Satisfying regulatory requirements for safety, accuracy and efficacy for well-defined use cases or indications.

- Ensuring transparency, explainability and intelligibility: Publishing or documenting sufficient information about an AI technology before it is designed or deployed.

- Fostering responsibility and accountability: Using AI only under appropriate conditions and only by appropriately trained people.

- Ensuring inclusiveness and equity: Organizations should design AI to encourage the widest possible equitable use and access, irrespective of age, sex, gender, income, race, ethnicity, sexual orientation, ability or other characteristics protected under human rights codes.

- Promoting AI that is responsive and sustainable: Designers, developers and users should continuously and transparently assess AI applications during actual use to determine whether AI responds adequately and appropriately to expectations and requirements.

AI use cases during COVID

Across health care, COVID sparked AI adoption in numerous forms. Mark Ziemianski of Children’s Health in Dallas says that the AI implementation within his organization was born out of necessity during the pandemic: the hospital wasn’t sure it could get personal protective equipment (PPE) and COVID tests to its workers quickly enough.

“It forced us to manage our supplies. We learned very quickly we were going to have to do a little bit better than manage the existing supplies, we were going to have to forecast the usage,” says Ziemianski.

The hospital built a couple of AI-driven data models that let it look at five years of operating room cases by procedure and identify who was in the room at the time and the equipment they would likely need. “As cases were scheduled, we could forecast the need against the inventory that we had on hand. That allowed us to be able to carefully maintain inventory so we wouldn’t run out or if we were going to run out, we could reach out to vendors,” he says.
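The article doesn't describe how Children's Health built its models, so the following is only a minimal sketch of the general idea, not the hospital's method. It assumes the simplest possible approach: average each supply item's consumption per historical case by procedure, multiply that rate across the scheduled cases, and compare the result with stock on hand. The `Case` record layout, the function names and all the numbers are hypothetical and made up for illustration.

```python
import math
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Case:
    """One historical operating-room case (hypothetical record layout)."""
    procedure: str
    supplies_used: dict = field(default_factory=dict)  # item name -> units consumed


def usage_rates(history):
    """Average units of each supply item consumed per case, grouped by procedure."""
    totals = defaultdict(lambda: defaultdict(int))
    counts = defaultdict(int)
    for case in history:
        counts[case.procedure] += 1
        for item, qty in case.supplies_used.items():
            totals[case.procedure][item] += qty
    # Dividing by the total case count treats an item missing from a case as 0 units.
    return {
        proc: {item: qty / counts[proc] for item, qty in items.items()}
        for proc, items in totals.items()
    }


def forecast_shortfalls(scheduled, rates, inventory):
    """Expected demand from scheduled cases minus stock on hand; gaps only."""
    demand = defaultdict(float)
    for proc in scheduled:
        # Procedures with no history contribute nothing in this simple sketch.
        for item, rate in rates.get(proc, {}).items():
            demand[item] += rate
    shortfalls = {}
    for item, need in demand.items():
        on_hand = inventory.get(item, 0)
        if need > on_hand:
            # Round up, since supplies are ordered in whole units.
            shortfalls[item] = math.ceil(need - on_hand)
    return shortfalls


# Made-up example data.
history = [
    Case("appendectomy", {"gown": 4, "mask": 4}),
    Case("appendectomy", {"gown": 6, "mask": 6}),
    Case("tonsillectomy", {"gown": 3, "mask": 3, "gloves": 6}),
]
rates = usage_rates(history)
scheduled = ["appendectomy", "appendectomy", "tonsillectomy"]
inventory = {"gown": 20, "mask": 10, "gloves": 4}
print(forecast_shortfalls(scheduled, rates, inventory))  # {'mask': 3, 'gloves': 2}
```

Any item that appears in the result is one the hospital would need to reorder from vendors before the scheduled cases run. A production model would likely account for seasonality, staffing and lead times, which this per-procedure average ignores.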

Later in the pandemic, Children’s Health used the AI forecasting tool to manage vaccine supply and demand among the hospital’s 35,000 employees. The health system has since expanded the tool to other operational uses, such as managing emergency department capacity.

Parkview Medical Center is a smaller health care organization: a non-profit, independent community hospital in Pueblo, Colorado, with 260 acute-care beds and approximately 2,700 employees. Like Children’s, Parkview used AI to manage capacity, which mattered especially during COVID for a hospital with a limited number of beds serving a large area that stretches into New Mexico.

Sandeep Vijan, MD, Vice President of Medical Affairs and Quality and chief medical officer (CMO) of Parkview, says the organization used natural language processing, predictive modeling and machine learning to draw insights from both structured and unstructured EHR data, helping providers identify discharge barriers throughout a patient’s stay. The tool helped reduce excess length of stay by 88 percent and ensured the small hospital didn’t run out of beds during COVID. Vijan says the complex challenges of today’s health system require these kinds of data-driven solutions.